Cybersecurity Challenges in IoT Implementation: Risks and Mitigation Strategies

Introduction to IoT and Cybersecurity: The Internet of Things (IoT) has emerged as a transformative force in the digital landscape, connecting an unprecedented number of devices and enabling seamless communication between them. As businesses, homes, and public infrastructures increasingly integrate IoT devices, their potential to enhance efficiency, streamline processes, and drive innovation is significant. For…

Latest Posts

How Generative AI is Revolutionizing the Creative Industry

Introduction to Generative AI and Its Impact: Generative AI refers to…

Cyberdyne’s Adoption of ROS: Revolutionizing Automation with Ubuntu and Snaps

Introduction to Cyberdyne and ROS: Cyberdyne Inc. has emerged as a…

The Quantum Leap: Enhancing Artificial Intelligence through Quantum Computing

Introduction to Quantum Computing and AI: In recent years, the fields…

Creating Biomechanical Masterpieces: Crafting Digital Art in a Futuristic Realm

Introduction to Biomechanical Art: Biomechanical art represents a captivating intersection between…

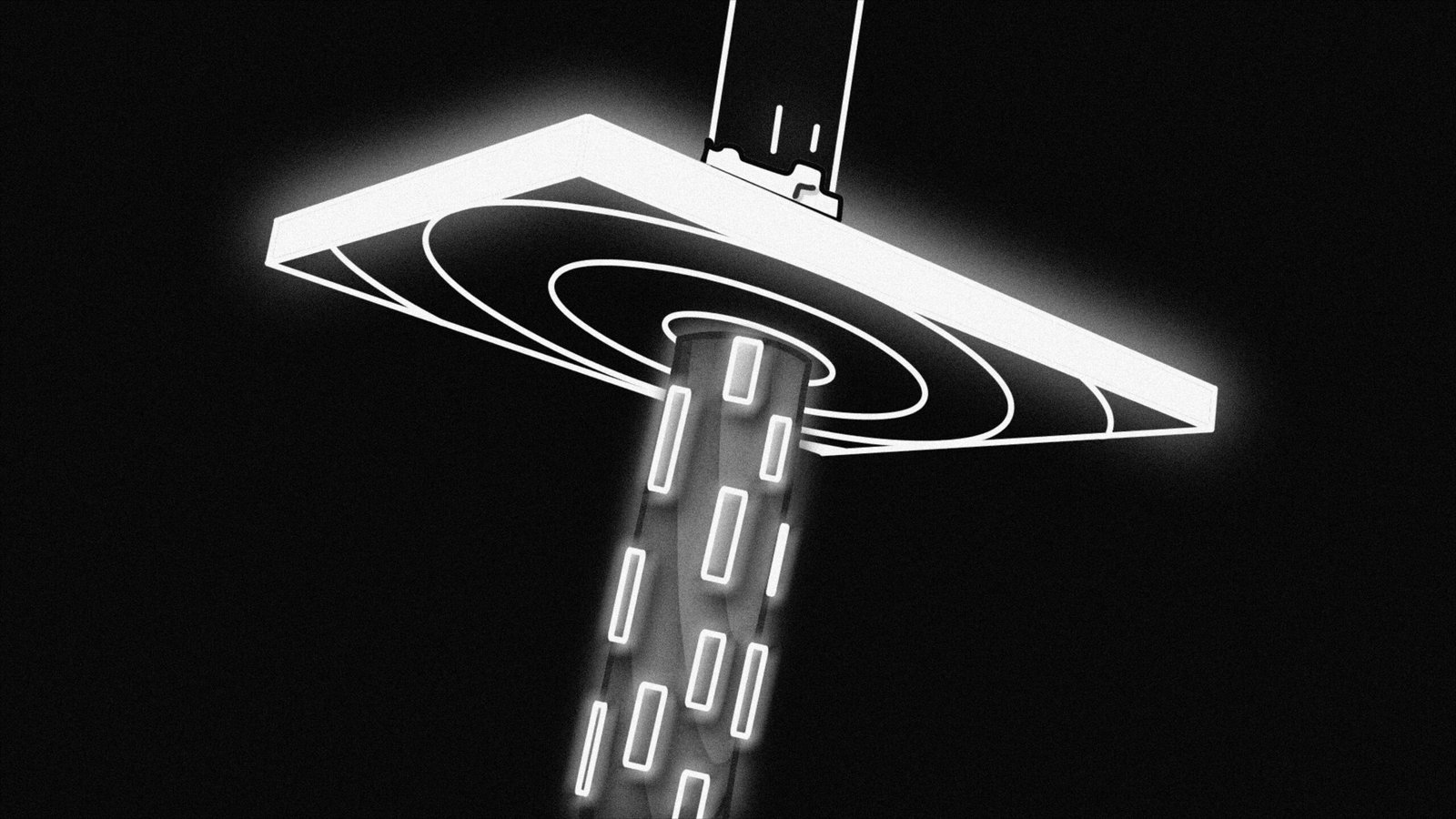

How Cloud Connectivity Powers IoT: A High-Quality Visual Representation

Introduction to Cloud Connectivity in IoT: Cloud connectivity in the Internet…

Hi,

I am DR. MASA

I’m the founder of Rookie Bytes. With a passion for technology and education, I created this platform to bridge the gap between curiosity and mastery in the tech world.